Eigenvalues are a fundamental concept in linear algebra with applications in physics, engineering, and computer science. In essence, an eigenvalue is a scalar that describes how much a linear transformation stretches or shrinks vectors along a direction that the transformation leaves unchanged. In this blog post, we will explore the concept of eigenvalues in more detail, and answer a common question: do row operations change eigenvalues?

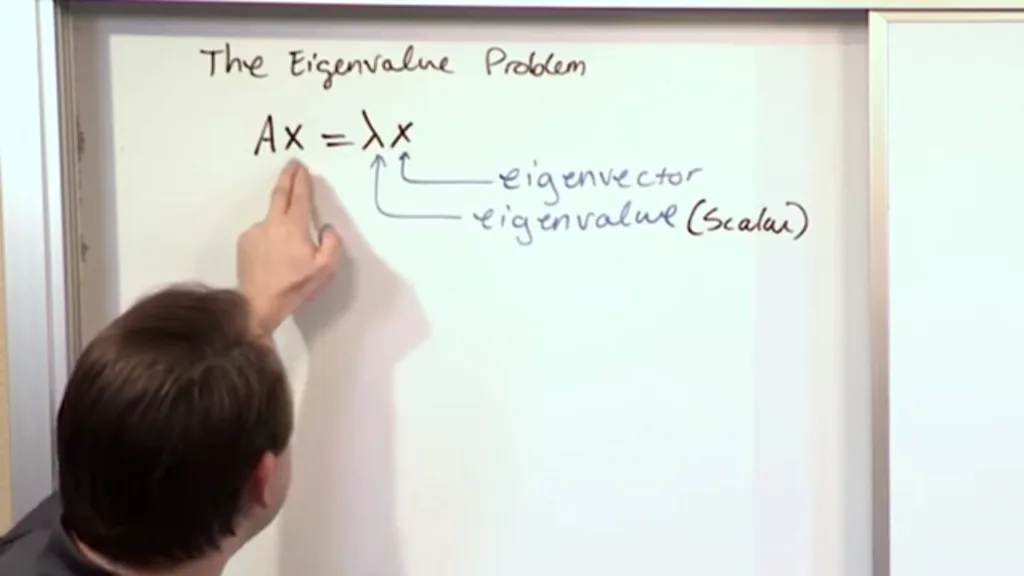

First, let’s define eigenvalues more precisely. Given a square matrix A, an eigenvalue λ is a scalar value that satisfies the equation:

Av = λv

where v is a non-zero vector in the vector space. In other words, multiplying the matrix A by the vector v results in a scalar multiple of v, represented by the eigenvalue λ. The vector v is called an eigenvector of A corresponding to the eigenvalue λ.
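As a quick check of the definition, the sketch below multiplies a small matrix by a vector and compares the result with a scalar multiple of that vector. The matrix, the vector, and the helper name `mat_vec` are illustrative choices made for this example, not taken from any library.

```python
def mat_vec(A, v):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[2, 1],
     [1, 2]]          # illustrative matrix, chosen for easy arithmetic
v = [1, 1]            # candidate eigenvector
lam = 3               # candidate eigenvalue

Av = mat_vec(A, v)
lam_v = [lam * x for x in v]
print(Av, lam_v)      # the two lists agree, so A v = lam v
```

Any non-zero multiple of v would pass the same check, which is why eigenvectors are only defined up to scaling.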

Eigenvalues and eigenvectors are essential in many applications, such as determining the stability of a physical system or compressing image or audio data. In addition, eigenvalues can provide insight into the behavior of a system, such as whether it is contracting or expanding, or whether it has a periodic cycle.

Now, let’s turn to the question of whether row operations change eigenvalues. Row operations are a set of operations that can be performed on a matrix to simplify it or bring it to a specific form. The three main types of row operations are:

1. Swapping two rows

2. Multiplying a row by a non-zero scalar

3. Adding a multiple of one row to another row

It turns out that row operations generally do change the eigenvalues of a matrix. An elementary row operation replaces A with a new matrix A’ = EA, where E is an invertible elementary matrix, and the equation A’v = λv is a different equation from Av = λv. Nothing forces a scalar λ that solves one equation to solve the other, so the eigenvalues and eigenvectors of A’ will usually differ from those of A.

To see this in action, let’s consider a simple example. Suppose we have the matrix A:

A = [1 2; 3 4]

The eigenvalues of A are λ1 = -0.3723 and λ2 = 5.3723, and the corresponding eigenvectors are:

v1 = [-0.8246; 0.5658]

v2 = [-0.4159; -0.9094]

Now, let’s apply the row operation of adding 2 times the first row to the second row:

A’ = [1 2; 5 8]

The eigenvalues of A’ are the roots of λ^2 – 9λ – 2 = 0, namely λ1 = -0.2170 and λ2 = 9.2170, and the corresponding eigenvectors are:

v1′ = [-0.8543; 0.5198]

v2′ = [-0.2365; -0.9716]

As we can see, the eigenvalues and eigenvectors of A’ are different from those of A, demonstrating that row operations can change eigenvalues.
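The comparison can be reproduced with a few lines of code. This is a minimal sketch that assumes a 2×2 matrix with real eigenvalues and uses the quadratic formula on the trace and determinant; the helper name `eig2x2` is made up for this example.

```python
import math

def eig2x2(M):
    """Real eigenvalues of a 2x2 matrix, from its trace and determinant."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(disc)
    return ((tr - root) / 2, (tr + root) / 2)

A = [[1, 2], [3, 4]]
# Row operation R2 -> R2 + 2*R1
A_prime = [A[0], [y + 2 * x for x, y in zip(A[0], A[1])]]

print(A_prime)
print([round(x, 4) for x in eig2x2(A)])
print([round(x, 4) for x in eig2x2(A_prime)])
```

The two printed lists of eigenvalues differ, even though only one row operation separates the matrices.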

Eigenvalues are a crucial concept in linear algebra that can provide insights into the behavior of systems and are used in many applications. However, it is important to note that row operations generally change eigenvalues, so care must be taken when performing these operations on matrices.

Do Eigenvalues Change with Row Replacement Operations?

Row replacement operations can change eigenvalues. Eigenvalues are defined as the solutions to the characteristic equation of a matrix, det(A – λI) = 0. A row replacement leaves the determinant of A itself unchanged, but it is applied to A rather than to A – λI, so it generally changes other coefficients of the characteristic polynomial, such as the trace, and therefore changes the eigenvalues.

For example, consider the matrix A = [[1, 2], [3, 4]]. The characteristic equation for this matrix is λ^2 – 5λ – 2 = 0, which has eigenvalues λ = (5 ± √33)/2. If we perform a row replacement operation on this matrix, such as replacing the first row with the sum of the two rows, we get a new matrix B = [[4, 6], [3, 4]], which has the same determinant (–2) but a different trace (8 instead of 5) and therefore a different characteristic equation. The new characteristic equation is λ^2 – 8λ – 2 = 0, which has eigenvalues λ = 4 ± 3√2.

Thus, we can see that row replacement operations can change the eigenvalues of a matrix. It is important to note that other types of elementary row operations, such as scaling or swapping rows, also have the potential to change the eigenvalues of a matrix.
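To compare all three kinds of row operation side by side, the sketch below prints the trace and determinant of a 2×2 matrix after each one. For a 2×2 matrix the characteristic polynomial is λ^2 – (trace)λ + (determinant), so any change in this pair means the eigenvalues changed. The helper name `char_poly` is an assumption of this example.

```python
def char_poly(M):
    """Return (trace, det) of a 2x2 matrix; its characteristic
    polynomial is lambda^2 - trace*lambda + det."""
    (a, b), (c, d) = M
    return (a + d, a * d - b * c)

A = [[1, 2], [3, 4]]
swapped  = [A[1], A[0]]                                  # R1 <-> R2
scaled   = [[2 * x for x in A[0]], A[1]]                 # R1 -> 2*R1
replaced = [[x + y for x, y in zip(A[0], A[1])], A[1]]   # R1 -> R1 + R2

for name, M in [("A", A), ("swap", swapped),
                ("scale", scaled), ("replace", replaced)]:
    print(name, char_poly(M))
```

Each operation produces a different pair, so each one changes the eigenvalues of this particular matrix. Note that the replacement keeps the determinant and changes only the trace.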

Do Eigenvalues Remain Constant During Row Reduction?

Eigenvalues are a fundamental concept in linear algebra that are used to describe the behavior of linear transformations. They are values that represent how a transformation stretches or shrinks along its eigenvectors. One common method for solving linear systems, and for finding eigenvectors once an eigenvalue is known, is row reduction.

However, performing row reduction on a matrix generally changes its eigenvalues. Row reduction involves swapping rows, multiplying rows by constants, and adding multiples of rows to other rows. These operations preserve the rank and the row space of the matrix, but not its characteristic polynomial, so the eigenvalues and eigenvectors of the reduced matrix usually differ from those of the original.

Each elementary row operation corresponds to multiplying the matrix on the left by an invertible elementary matrix E, giving EA. To preserve the eigenvalues, the row operation must be paired with the inverse column operation, giving EAE^(-1). This operation is known as a similarity transformation, and it preserves the eigenvalues of a matrix.

In conclusion, while row reduction is a useful tool for solving linear systems, it does not preserve the eigenvalues of a matrix. To preserve eigenvalues, one must use similarity transformations instead.
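The difference between a row operation and a similarity transformation can be checked on a small example. The sketch below is illustrative only: it takes E to be the elementary matrix for R2 -> R2 – 3R1 and compares the trace and determinant, which together determine the eigenvalues of a 2×2 matrix. The helper names are assumptions of this example.

```python
def matmul(X, Y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)]
            for row in X]

def trace_det(M):
    """Trace and determinant of a 2x2 matrix."""
    (a, b), (c, d) = M
    return (a + d, a * d - b * c)

A     = [[1, 2], [3, 4]]
E     = [[1, 0], [-3, 1]]   # elementary matrix for R2 -> R2 - 3*R1
E_inv = [[1, 0], [3, 1]]    # its inverse: R2 -> R2 + 3*R1

row_reduced = matmul(E, A)                  # row operation only
similar     = matmul(matmul(E, A), E_inv)   # row operation plus inverse column operation

print(trace_det(A), trace_det(row_reduced), trace_det(similar))
```

The first and last pairs match, while the middle one does not: only the similarity transformation keeps the eigenvalues.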

Does Row Echelon Form Affect Eigenvalues?

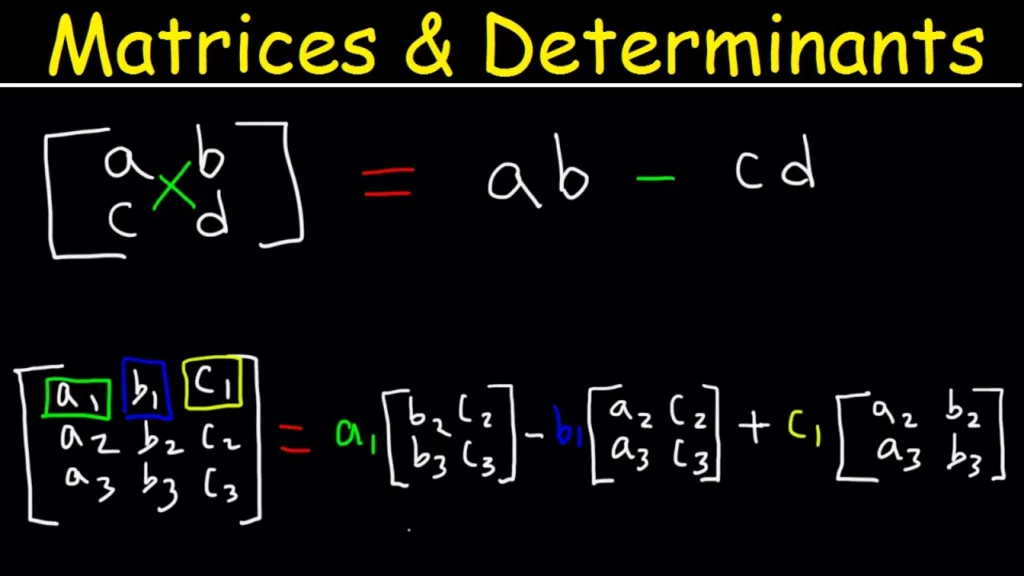

Yes, transforming a matrix to row echelon form generally changes its eigenvalues. The eigenvalues of a matrix are the values that satisfy the characteristic equation of the matrix. The characteristic equation is obtained by taking the determinant of the matrix minus lambda times the identity matrix and setting it equal to zero.

When a matrix is transformed to row echelon form, elementary row operations are performed on the matrix, such as swapping rows, multiplying rows by constants, and adding multiples of one row to another. Swapping and scaling change the determinant of the matrix, and all three kinds of operation can change its trace, so the characteristic equation and the eigenvalues generally change.

For example, consider the matrix A = [1 2; 3 4]. The characteristic polynomial of A is det(A – lambda*I) = (1-lambda)*(4-lambda) – 6 = lambda^2 – 5lambda – 2. The eigenvalues of A are the roots of this polynomial, which are lambda = (5 +/- sqrt(33))/2.

If we transform A to row echelon form using R2 -> R2 – 3R1, we get [1 2; 0 -2]. The determinant of this matrix is -2, the same as the determinant of A, because a row replacement preserves the determinant. The trace, however, changes from 5 to -1, so the characteristic equation becomes lambda^2 + lambda – 2 = 0. The eigenvalues of the row echelon form of A are therefore lambda = 1 and lambda = -2, which differ from those of A.

In conclusion, reducing a matrix to row echelon form generally changes its eigenvalues, because the characteristic equation changes even when the determinant does not.

Do Row Operations Alter Matrices?

Yes, performing row operations on a matrix changes the matrix. Row operations involve swapping, scaling, or adding rows to the matrix, which alters the elements in the matrix. However, it is important to note that the row operations do not change the solutions to the system of equations represented by the matrix. The solutions to the system remain the same, but the matrix itself may be transformed into a more desirable form that makes solving the system easier.

Effect of Row Operations on Eigenvalues

Row operations are operations that can be performed on the rows of a matrix in order to transform it into a simpler form, such as row echelon form or reduced row echelon form. While row operations can be very useful for solving systems of linear equations, they generally do not preserve the eigenvalues of a matrix.

In fact, performing row operations on a matrix will typically change its eigenvalues. This is because the eigenvalues are determined by the characteristic polynomial of the matrix, which is a polynomial function of the matrix’s entries. Row operations can change these entries, and therefore can change the characteristic polynomial and its roots (i.e. the eigenvalues).

For example, consider the matrix:

[1 2]

[3 4]

This matrix has eigenvalues λ1 = -0.3723 and λ2 = 5.3723. However, if we perform the row operation R2 -> R2 – 3R1, we get the matrix:

[1 2]

[0 -2]

This matrix has eigenvalues λ1 = 1 and λ2 = -2. As you can see, the row operation has completely changed the eigenvalues of the matrix.

Therefore, if you need to find the eigenvalues of a matrix, it is important to use methods such as diagonalization or the characteristic polynomial, rather than relying on row operations.

Do Row Operations Affect the Solution Set?

No, row operations do not change the solution set of a linear system. This is because elementary row operations involve multiplying or adding rows of the augmented matrix, which is equivalent to performing the same operations on the corresponding system of equations. Since these operations do not change the relationships between the variables, they do not affect the solution set.

Therefore, the solution set of a linear system remains the same even after performing row operations. In fact, the solution set can be obtained by solving the system whose augmented matrix is in echelon or reduced echelon form. Echelon form provides a systematic and efficient way to solve a linear system: the leading entry (pivot) of each non-zero row lies to the right of the pivot in the row above, which gives the matrix a triangular, staircase shape.

Once a linear system is reduced to echelon form, it can be solved from the bottom up using back substitution. This involves solving for the variables starting from the bottom row, which has a pivot for the variable with the highest index. The value of this variable can be substituted into the equation above it, and so on, until all the variables have been determined. In reduced echelon form this work has already been done, and the solution can be read off directly.

Therefore, row operations are an essential tool for solving linear systems, as they allow us to transform a system into an equivalent one that is easier to solve. However, it is important to note that row operations should be performed carefully and accurately, as errors can lead to incorrect solutions.
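The procedure described above can be sketched in code. This is a minimal illustration, not a production solver: it assumes a square system with a unique solution, uses exact fractions to avoid rounding, and the function name `solve` and the sample system are made up for the example.

```python
from fractions import Fraction

def solve(aug):
    """Solve a square system from its augmented matrix [A | b] using
    Gaussian elimination and back substitution.
    Assumes the system has a unique solution."""
    n = len(aug)
    M = [[Fraction(x) for x in row] for row in aug]
    for col in range(n):
        # Row swap: bring a row with a non-zero pivot into position.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        # Row replacement: clear the entries below the pivot.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    # Back substitution, from the bottom row up.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

aug = [[1, 2, 5],
       [3, 4, 6]]   # x + 2y = 5, 3x + 4y = 6
x = solve(aug)
print(x)

# The solution of the reduced system also satisfies the original equations.
assert all(sum(a * xi for a, xi in zip(row[:-1], x)) == row[-1] for row in aug)
```

The final assertion is the point of the example: the values found from the echelon form are checked against the original, unreduced equations.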

Finding Eigenvalues Through Row Reduction

No, you cannot find eigenvalues by row reducing. While row operations may help to simplify a matrix, they typically change the eigenvalues of the matrix. Therefore, applying row operations to a matrix would not necessarily result in correct eigenvalues. To find eigenvalues, you need to calculate the characteristic polynomial of the matrix, which involves finding the determinant of (lambda * I – A), where lambda is an unknown scalar and A is the matrix. The roots of the characteristic polynomial correspond to the eigenvalues of the matrix.

A related fact is that the eigenvalues of a triangular matrix are its diagonal entries. To see why, let’s consider an upper triangular matrix A with entries a11, a22, …, ann on its diagonal, and let λ be an eigenvalue of this matrix. Then, by definition, there exists a non-zero vector x such that Ax = λx. Writing out the matrix-vector multiplication, we have

a11x1 + a12x2 + … + a1nxn = λx1

0 + a22x2 + … + a2nxn = λx2

0 + 0 + … + annxn = λxn

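Since x is non-zero, at least one of x1, x2, …, xn must be non-zero. Let k be the largest index for which xk is non-zero, so that every later component of x is zero. Then all the terms to the right of the diagonal in the k-th equation vanish, and that equation reduces to

akkxk = λxk

which implies that λ = akk, since xk is non-zero. Therefore, every eigenvalue of a triangular matrix is one of its diagonal entries. Conversely, every diagonal entry is an eigenvalue, because det(A – λI) = (a11 – λ)(a22 – λ)…(ann – λ) vanishes exactly when λ equals one of them.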
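The claim about triangular matrices can be checked numerically. The sketch below, with an arbitrary 3×3 upper triangular matrix chosen for illustration, evaluates det(T – λI) at each diagonal entry and at one value that is not on the diagonal. The helper names `det` and `shifted` are assumptions of this example.

```python
def det(M):
    """Determinant by cofactor expansion along the first row."""
    if len(M) == 1:
        return M[0][0]
    total = 0
    for j, entry in enumerate(M[0]):
        minor = [row[:j] + row[j + 1:] for row in M[1:]]
        total += (-1) ** j * entry * det(minor)
    return total

def shifted(M, lam):
    """Return M - lam * I."""
    return [[x - lam if i == j else x for j, x in enumerate(row)]
            for i, row in enumerate(M)]

T = [[2, 5, 1],
     [0, 3, 7],
     [0, 0, 4]]     # upper triangular, diagonal entries 2, 3, 4

for lam in (2, 3, 4):
    print(lam, det(shifted(T, lam)))   # zero for every diagonal entry

print(5, det(shifted(T, 5)))           # non-zero, so 5 is not an eigenvalue
```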

Does Matrix Multiplication Affect Eigenvalues?

Yes, matrix multiplication can change the eigenvalues of a matrix. However, if we multiply a matrix by a scalar c, then all its eigenvalues are multiplied by the same scalar. This follows directly from the definition: if Av = λv, then (cA)v = (cλ)v, so v is still an eigenvector and its eigenvalue is cλ.

On the other hand, when we multiply a matrix by another matrix, the eigenvalues of the product can be different from those of the original matrix, and in general they are not determined by the eigenvalues of the two factors alone. Only the determinant behaves simply: det(AB) = det(A)det(B), so the product of the eigenvalues of AB equals the product of the eigenvalues of A times the product of the eigenvalues of B.

In summary, multiplying a matrix by a scalar multiplies every eigenvalue by that scalar, while multiplying a matrix by another matrix can change the eigenvalues in ways that depend on both matrices.
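The scalar case is easy to verify directly. The sketch below uses an illustrative matrix with a known eigenvector and checks, for a few arbitrary scalars c, that the same vector is an eigenvector of cA with eigenvalue c times the original. The names are assumptions of this example.

```python
def mat_vec(A, v):
    """Multiply a matrix (a list of rows) by a vector (a list)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]

A = [[2, 1],
     [1, 2]]
v, lam = [1, 1], 3     # A v = 3 v

for c in (2, -1, 10):
    cA = [[c * a for a in row] for row in A]
    # v is still an eigenvector of cA, now with eigenvalue c * lam.
    assert mat_vec(cA, v) == [c * lam * x for x in v]
    print(c, mat_vec(cA, v))
```

With c = -1 the eigenvalue changes sign, so scaling affects more than just the magnitude of the eigenvalues.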

Are the Eigenvalues of a Matrix and its Reduced Row Echelon Form Equal?

No, the eigenvalues of a matrix and its reduced row echelon form are not always the same. An eigenvalue of a matrix A is a scalar λ for which some non-zero vector v satisfies Av = λv. The reduced row echelon form of a matrix, on the other hand, is the unique form obtained after applying a series of elementary row operations. These row operations do not preserve the eigenvalues of a matrix, meaning that the reduced row echelon form may have different eigenvalues than the original matrix. For instance, every invertible matrix has the identity matrix as its reduced row echelon form, and the only eigenvalue of the identity is 1. The two sets of eigenvalues coincide only in special cases, such as when the matrix is already in reduced row echelon form.
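A small reduction routine makes this concrete. The sketch below is an illustrative implementation of reduced row echelon form using exact fractions; the function name `rref` is an assumption of this example and is not tied to any particular library.

```python
from fractions import Fraction

def rref(M):
    """Reduced row echelon form of a matrix, using exact fractions."""
    M = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(M), len(M[0])
    pivot_row = 0
    for col in range(cols):
        # Find a row at or below pivot_row with a non-zero entry in this column.
        pr = next((r for r in range(pivot_row, rows) if M[r][col] != 0), None)
        if pr is None:
            continue
        M[pivot_row], M[pr] = M[pr], M[pivot_row]          # row swap
        p = M[pivot_row][col]
        M[pivot_row] = [x / p for x in M[pivot_row]]       # row scaling
        for r in range(rows):
            if r != pivot_row and M[r][col] != 0:
                f = M[r][col]
                # row replacement: clear the rest of the pivot column
                M[r] = [x - f * y for x, y in zip(M[r], M[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return M

identity = [[1, 0], [0, 1]]
print(rref([[1, 2], [3, 4]]) == identity)   # invertible matrix
print(rref([[2, 0], [0, 3]]) == identity)   # diagonal matrix with eigenvalues 2 and 3
```

Both matrices reduce to the identity, whose only eigenvalue is 1, even though neither of them has 1 as an eigenvalue.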

Do Row Equivalent Matrices Have the Same Eigenvalues?

No, row equivalent matrices do not necessarily have the same eigenvalues. Two matrices are row equivalent if one can be obtained from the other by performing a finite sequence of elementary row operations. These operations preserve the row space, the rank, and the null space of the matrix, but not its characteristic polynomial, so row equivalent matrices can have different eigenvalues and eigenvectors. Therefore, while row equivalence is a useful concept in linear algebra, it does not imply similarity, which is the condition that guarantees two matrices have the same eigenvalues (though not necessarily the same eigenvectors).

Do Rotations Have Eigenvalues?

Yes, but in general not real ones. A rotation of the plane by an angle θ that is not a multiple of 180° has no real eigenvalues, because it leaves no direction unchanged; its eigenvalues are the complex numbers cos θ ± i sin θ. A rotation in three dimensions, by contrast, always has the real eigenvalue 1, whose eigenvectors lie along the axis of rotation. Therefore, to account for all possible eigenvalues, we extend our scalars from the real numbers to the complex numbers. This allows us to fully capture the properties and behavior of rotations as linear operators.
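For a rotation of the plane, the eigenvalues can be computed from the characteristic polynomial with complex arithmetic. This is a minimal sketch assuming the standard 2×2 rotation matrix [cos θ, –sin θ; sin θ, cos θ]; the function name `rotation_eigenvalues` is made up for this example.

```python
import cmath
import math

def rotation_eigenvalues(theta):
    """Eigenvalues of the 2x2 rotation matrix for angle theta (radians).

    Its characteristic polynomial is lambda^2 - 2*cos(theta)*lambda + 1.
    """
    tr = 2 * math.cos(theta)
    root = cmath.sqrt(tr * tr - 4)   # complex square root handles a negative discriminant
    return ((tr + root) / 2, (tr - root) / 2)

lam1, lam2 = rotation_eigenvalues(math.pi / 2)   # 90-degree rotation
print(lam1, lam2)                                # approximately i and -i
print(abs(lam1), abs(lam2))                      # both have modulus 1
```

The modulus of 1 reflects the fact that a rotation does not stretch or shrink anything.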

Finding Eigenvalues of Row Echelon Form

To find the eigenvalues of a square matrix in row echelon form, we only need to look at the diagonal elements of the matrix. Keep in mind that these are the eigenvalues of the echelon form itself, which generally differ from those of the matrix it was reduced from.

The eigenvalues are the values of λ that satisfy the equation |A – λI| = 0, where A is the matrix and I is the identity matrix. A square matrix in row echelon form is already upper triangular, and so is A – λI, which means the determinant can be computed by taking the product of the diagonal elements: |A – λI| = (a11 – λ)(a22 – λ)…(ann – λ).

Setting this product equal to zero shows that the eigenvalues are exactly the diagonal elements a11, a22, …, ann. In particular, if any of the diagonal elements is zero, then zero is an eigenvalue and the matrix is singular.

Once we have found the eigenvalues, we can find the corresponding eigenvectors by solving the equation (A – λI)x = 0, where x is the eigenvector. It’s important to note that the eigenvectors generally cannot be read off from the columns of the matrix; only for a diagonal matrix are the standard basis vectors guaranteed to be eigenvectors.

Rules for Row Operations

The rules for row operations in matrix algebra are quite simple and straightforward. There are three elementary row operations that can be performed on a matrix to transform it into a row equivalent matrix.

The first operation is a Row Swap, which involves exchanging any two rows of the matrix. This operation can be performed by simply interchanging the positions of the two rows in question.

The second operation is Scalar Multiplication, which involves multiplying any row of the matrix by a non-zero scalar. This operation can be performed by multiplying each element of the row by the scalar.

The third operation is Row Sum, which involves adding a multiple of one row to another row of the matrix. This operation can be performed by adding the multiple of the first row to the second row.

It is important to note that each of these operations preserves the solution set of a linear system when it is applied to the system’s augmented matrix. Furthermore, all three operations are reversible, meaning that they can be undone by performing the opposite operation. For example, if a row is multiplied by 2, the inverse operation would be to divide the same row by 2.

It is also important to remember that when performing row operations, only the rows are modified, and not the columns. This means that the order of the columns in the matrix remains the same throughout the row operations.

Overall, the rules for row operations are simple and intuitive, and they allow for the manipulation of matrices to solve various matrix equations.
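The three operations and their reversibility can be sketched as small functions. The names `swap`, `scale`, and `add_multiple` are chosen for this example; each function returns a new matrix rather than modifying its argument.

```python
from fractions import Fraction

def swap(M, i, j):
    """Return a copy of M with rows i and j exchanged."""
    M = [row[:] for row in M]
    M[i], M[j] = M[j], M[i]
    return M

def scale(M, i, c):
    """Return a copy of M with row i multiplied by a non-zero scalar c."""
    if c == 0:
        raise ValueError("scaling by zero is not an elementary row operation")
    M = [row[:] for row in M]
    M[i] = [c * x for x in M[i]]
    return M

def add_multiple(M, src, dst, c):
    """Return a copy of M with c times row src added to row dst."""
    M = [row[:] for row in M]
    M[dst] = [y + c * x for x, y in zip(M[src], M[dst])]
    return M

A = [[1, 2], [3, 4]]

# Each operation is undone by an operation of the same type.
assert swap(swap(A, 0, 1), 0, 1) == A
assert scale(scale(A, 0, 2), 0, Fraction(1, 2)) == A
assert add_multiple(add_multiple(A, 0, 1, -3), 0, 1, 3) == A

print(add_multiple(A, 0, 1, -3))   # R2 -> R2 - 3*R1
```

Rejecting a zero scalar in `scale` matters: multiplying a row by zero cannot be undone, so it is not an elementary row operation.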

Does Row Replacement Affect the Determinant of a Matrix?

No, a row replacement operation does not affect the determinant of a matrix. This is because the determinant is linear in each row and equals zero whenever two rows are identical, so adding a multiple of one row to another contributes only a term that vanishes.

A row replacement operation replaces one row of the matrix with the sum of that row and a multiple of another row. Equivalently, it multiplies the matrix on the left by an elementary matrix whose determinant is 1. Therefore, the determinant of the matrix remains the same, regardless of how many row replacement operations have been performed on it.

It is worth noting that the other types of row operations do affect the determinant of a matrix: swapping two rows multiplies the determinant by –1, and multiplying a row by a scalar c multiplies the determinant by c.
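The effect of each operation on the determinant can be confirmed on a 2×2 example. In this sketch the matrix, the scale factor 5, and the helper name `det2` are illustrative choices.

```python
def det2(M):
    """Determinant of a 2x2 matrix."""
    (a, b), (c, d) = M
    return a * d - b * c

A = [[1, 2], [3, 4]]
swapped  = [A[1], A[0]]                                      # R1 <-> R2
scaled   = [[5 * x for x in A[0]], A[1]]                     # R1 -> 5*R1
replaced = [A[0], [y + 2 * x for x, y in zip(A[0], A[1])]]   # R2 -> R2 + 2*R1

print(det2(A), det2(swapped), det2(scaled), det2(replaced))
```

The swap flips the sign, the scaling multiplies the determinant by 5, and the replacement leaves it unchanged.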

Does Swapping Rows Affect the Determinant?

Yes, swapping two rows (or two columns) of a matrix changes the sign of its determinant. This is one of the standard properties of determinants.

To understand why swapping rows changes the determinant, we need to consider how a determinant is calculated. A determinant is a scalar value that can be calculated using the elements of a square matrix. The formula for calculating the determinant involves summing up products of matrix elements, where the sign of each product depends on the position of the elements within the matrix.

When we swap two rows of a matrix, the positions of the matrix elements change, which flips the sign of every product used to calculate the determinant. This means that if the original determinant is positive, the new determinant will be negative, and vice versa; a determinant of zero stays zero.

For example, consider the following 2×2 matrix:

| 1 2 |

| 3 4 |

The determinant of this matrix is (1*4) – (2*3) = -2. If we swap the first and second rows of the matrix, we get:

| 3 4 |

| 1 2 |

The determinant of this new matrix is (3*2) – (4*1) = 2. We can see that swapping the rows changed the sign of the determinant from negative to positive.

In summary, swapping rows (or columns) of a determinant changes its sign, which is a fundamental property of determinants.

Conclusion

In conclusion, eigenvalues are an important concept in linear algebra that have various applications in different fields, including physics, engineering, and computer science. Eigenvalues are the roots of the characteristic polynomial of a matrix and describe how the corresponding linear transformation acts along its eigenvectors: the magnitude of an eigenvalue gives the scaling factor, and a negative sign indicates a reversal of direction. Eigenvectors corresponding to distinct eigenvalues are linearly independent, and an n×n matrix can be diagonalized if it has n linearly independent eigenvectors. Moreover, eigenvalues are invariant under similarity transformations, but generally not under row operations, and can be used to determine the stability of a system. Overall, understanding eigenvalues is crucial for analyzing linear systems and has a wide range of practical applications.