In computing, a byte is the smallest addressable unit of memory on most systems (the bit is the smallest unit of data). On virtually all modern hardware a byte is made up of eight bits and can represent 256 distinct values, such as the integers 0 to 255. Bytes are used to store data, transfer data, and perform calculations in a computer system.
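As a minimal sketch of the arithmetic above, the following Java snippet counts the values that fit in eight bits. The class name `ByteBasics` and the helper `distinctValues` are made up for illustration. Note that Java's own `byte` type is signed, so the same eight bits read as -128 to 127 unless they are converted to an unsigned value.

```java
final class ByteBasics {
    // Number of distinct values that fit in the given number of bits (2^bits).
    static long distinctValues(int bits) {
        return 1L << bits;
    }

    public static void main(String[] args) {
        System.out.println(Byte.SIZE);                   // 8 bits in a Java byte
        System.out.println(distinctValues(Byte.SIZE));   // 256 distinct values

        // Java's byte is signed, so the bit pattern 0xFF reads as -1 ...
        byte allOnes = (byte) 0xFF;
        System.out.println(allOnes);                     // -1

        // ... and as 255 when the same bits are interpreted as unsigned.
        System.out.println(Byte.toUnsignedInt(allOnes)); // 255
    }
}
```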

One of the most common uses of bytes is to represent characters in a computer system. In the early days of computing, characters were represented using 7-bit ASCII encoding. This meant that each character could be represented by a single byte. However, as computing technology evolved, it became necessary to support a wider range of characters, including those from non-Latin scripts such as Chinese and Arabic.

To support these characters, Unicode was developed. Unicode is a standard that defines a unique number, called a code point, for every character, regardless of the platform, program, or language. Code points are stored as bytes using an encoding such as UTF-8, UTF-16, or UTF-32. UTF-8 and UTF-16 are variable-length, which means that a character can take one or more code units, while UTF-32 always uses 4 bytes per character.

In Java, the ‘char’ data type is used to represent characters. A Java char is always 2 bytes (16 bits) because it holds one UTF-16 code unit. Most commonly used characters fit in a single char, but characters outside the Basic Multilingual Plane, such as many emoji, need two chars (a surrogate pair), or 4 bytes.

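C, on the other hand, defines the ‘char’ type as exactly 1 byte, which is enough for any ASCII character, although the C standard does not require ASCII. A character constant such as 'a' has type int in C, so sizeof('a') equals sizeof(int), which is 4 bytes on most modern systems. A char variable holding the same value still takes up only 1 byte.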

It is worth noting that not every character needs all the bits of its storage unit. For example, an ASCII character is usually stored in 8 bits, or 1 byte, although it fits in 7 bits. Similarly, a character in ISO-8859-1 encoding is stored in 8 bits, or 1 byte.

The number of bytes required to represent a character depends on the encoding scheme used. In Java, a char is a 2-byte UTF-16 code unit, while in C, a char is 1 byte, which is enough for an ASCII character. The actual number of bytes for a given character therefore varies with both the character and the encoding.

Size of a Character

In Java, the ‘char’ data type represents a single 16-bit UTF-16 code unit. The Unicode standard assigns a unique number to every character, symbol, and punctuation mark in the writing systems it covers. Java uses the UTF-16 encoding for characters, which means that the size of the ‘char’ data type in Java is 2 bytes. Characters beyond the first 65,536 code points are stored as two chars. This allows Java to support a vast range of characters from different languages and scripts, making it a popular choice for developing multilingual applications.
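The following Java snippet is a small sketch of the 2-byte char size and of what happens to a character that does not fit in 16 bits. The class name `JavaCharSize` and the helper `charsNeeded` are illustrative only, and the code point U+1F600 (an emoji) is just one example of a character outside the 16-bit range.

```java
final class JavaCharSize {
    // Number of 16-bit char values needed to store the given Unicode code point.
    static int charsNeeded(int codePoint) {
        return Character.charCount(codePoint);
    }

    public static void main(String[] args) {
        System.out.println(Character.BYTES);       // 2 bytes per char

        String letter = "A";
        String emoji = new String(Character.toChars(0x1F600));

        System.out.println(letter.length());       // 1 char
        System.out.println(emoji.length());        // 2 chars (a surrogate pair)
        System.out.println(emoji.codePointCount(0, emoji.length())); // 1 character
    }
}
```

Because `String.length()` counts chars rather than characters, `codePointCount` is the safer call when the text may contain such characters.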

It is worth noting that the size of the ‘char’ data type in other programming languages may differ. For instance, in the C language, the size of the ‘char’ data type is 1 byte, which is enough for any ASCII character. Characters in multi-byte encodings such as UTF-8 are stored in C as a sequence of several chars. Therefore, when working with different programming languages, it is important to understand the size and encoding of the ‘char’ data type to ensure compatibility and proper handling of character data.

To summarize, a ‘char’ data type in Java is 2 bytes because it holds a 16-bit UTF-16 code unit, which covers most characters from various languages and scripts in a single char.

Source: hulft.com

Character Size in Bytes

In the C programming language, a character constant such as 'a' has type int. This means that the constant itself takes up sizeof(int) bytes, which is 4 bytes on most modern systems. A variable of type char, by contrast, always occupies exactly 1 byte.

However, it is important to note that the size of a character may vary depending on the programming language, system architecture, and implementation. For example, in some programming languages, a character may only occupy 1 byte of memory.

It is also worth mentioning that the size of a character is different from the size of a string, which is a collection of characters. A string may occupy multiple bytes of memory depending on its length.

In the C programming language, a character constant has type int and so typically takes up 4 bytes of memory, while a char variable takes up 1 byte. The size of a character may also vary depending on the programming language and system architecture.

Number of Bits in One Character

When it comes to character encoding, the number of bits required to represent a single character can vary depending on the encoding standard used. In ASCII, which is commonly used in computers and electronic communication, a single character is usually stored using 8 bits, or 1 byte. However, ASCII defines only 128 characters, so 7 bits are enough. Historically, the 8th bit was often used as a parity bit for error detection, and today it is normally left as 0.

On the other hand, in the ISO-8859-1 encoding standard, which has been widely used in Western Europe and the Americas, each character is also represented using 8 bits, or 1 byte, and all 8 bits carry character data. Its first 128 values match ASCII. This means that both ASCII and ISO-8859-1 characters require the same amount of space in memory or storage.
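As a rough illustration of the difference between the two encodings, the Java sketch below checks whether a character belongs to ASCII and how many bytes it takes in ISO-8859-1. The class name `Latin1VsAscii` and both helper methods are hypothetical names chosen for this example. The character U+00E9 (e with acute accent) is used as a sample that exists in ISO-8859-1 but not in ASCII.

```java
import java.nio.charset.StandardCharsets;

final class Latin1VsAscii {
    // True if the character is one of the 128 ASCII characters.
    static boolean fitsInAscii(char c) {
        return StandardCharsets.US_ASCII.newEncoder().canEncode(c);
    }

    // Number of bytes the text occupies when encoded as ISO-8859-1.
    static int latin1Length(String text) {
        return text.getBytes(StandardCharsets.ISO_8859_1).length;
    }

    public static void main(String[] args) {
        System.out.println(fitsInAscii('A'));       // true
        System.out.println(fitsInAscii('\u00E9'));  // false: outside the 7-bit range

        System.out.println(latin1Length("A"));      // 1 byte
        System.out.println(latin1Length("\u00E9")); // 1 byte, using the 8th bit
    }
}
```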

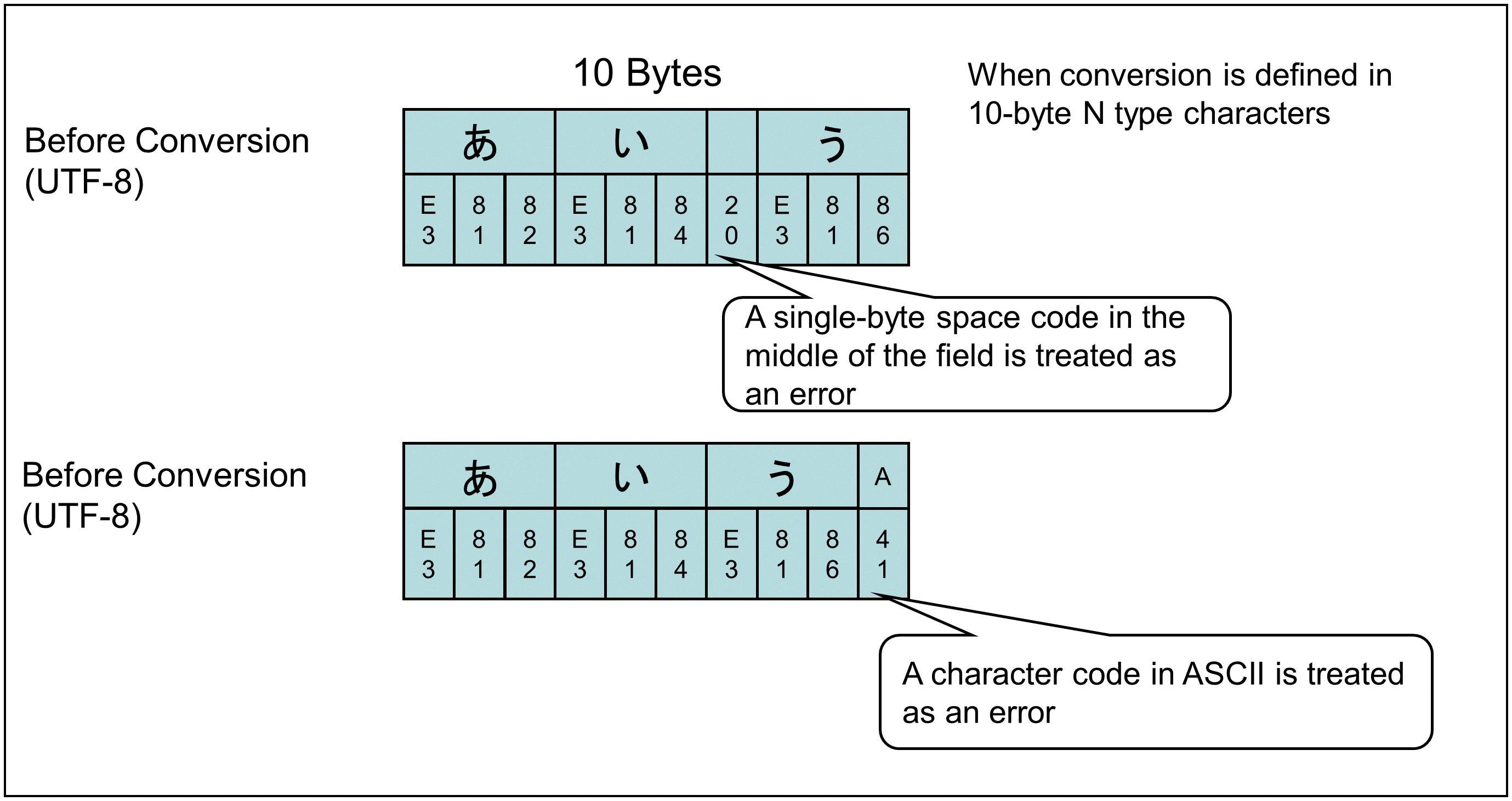

It is important to note that there are other character encoding standards that use a different number of bits per character, such as UTF-8, which can use anywhere from 1 to 4 bytes to represent a single character depending on the character’s Unicode code point value.
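The 1 to 4 byte range of UTF-8 can be checked with a short Java sketch such as the one below. The class name `Utf8Lengths`, the helper `utf8Length`, and the four sample code points are chosen here purely for illustration.

```java
import java.nio.charset.StandardCharsets;

final class Utf8Lengths {
    // Number of bytes UTF-8 needs for a single Unicode code point.
    static int utf8Length(int codePoint) {
        String text = new String(Character.toChars(codePoint));
        return text.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        System.out.println(utf8Length(0x0041));  // 1: Latin capital A
        System.out.println(utf8Length(0x00E9));  // 2: e with acute accent
        System.out.println(utf8Length(0x4E2D));  // 3: a common Chinese character
        System.out.println(utf8Length(0x1F600)); // 4: an emoji
    }
}
```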

Is One Character Equal to One Byte?

The answer to the question “Is 1 char 1 byte?” is not a straightforward one. While it is true that in general, 1 byte is equal to 1 ASCII character, there are some exceptions to this rule.

First of all, it is important to understand what a byte is. A byte is a unit of digital information that consists of 8 bits. It is the smallest addressable unit of storage on most computers and is often used to represent a single character, such as a letter, number, or symbol.

ASCII (American Standard Code for Information Interchange) is a character encoding standard that assigns a unique numerical value to each character. In ASCII, each character is stored in a single byte. Therefore, in ASCII, 1 char is indeed equal to 1 byte.

However, there are other character encoding standards that use more than one byte to represent a single character. For example, Unicode is a character standard whose encodings can use up to 4 bytes for a single character: UTF-8 uses 1 to 4 bytes, UTF-16 uses 2 or 4, and UTF-32 always uses 4. This is because Unicode supports a much larger number of characters than ASCII, including characters from non-Latin scripts such as Chinese, Arabic, and Cyrillic.
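To make the comparison concrete, the Java sketch below encodes one sample character from each of the scripts mentioned and reports the byte count in two encodings. The class name `EncodingComparison` and the helper `encodedLength` are illustrative names, and the sample characters are arbitrary picks. UTF-16BE is used instead of plain UTF-16 because Java's UTF-16 encoder prepends a 2-byte byte order mark, which would inflate the counts.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class EncodingComparison {
    // Number of bytes the text occupies in the given encoding.
    static int encodedLength(String text, Charset charset) {
        return text.getBytes(charset).length;
    }

    public static void main(String[] args) {
        // One sample each: Latin, Cyrillic, Arabic, Chinese.
        String[] samples = {"A", "\u0416", "\u0639", "\u4E2D"};
        for (String s : samples) {
            System.out.printf("U+%04X: UTF-8 = %d, UTF-16 = %d%n",
                    s.codePointAt(0),
                    encodedLength(s, StandardCharsets.UTF_8),
                    encodedLength(s, StandardCharsets.UTF_16BE));
        }
    }
}
```

Under these assumptions the Latin letter takes 1 byte in UTF-8, the Cyrillic and Arabic letters take 2, and the Chinese character takes 3, while all four take 2 bytes in UTF-16.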

While 1 byte is generally equal to 1 ASCII character, there are character encoding standards that use more than one byte to represent a single character. It is important to be aware of the encoding standard being used when working with digital information to ensure that the correct amount of storage space is allocated.

Conclusion

Bytes play a crucial role in computing and technology as a whole. They are the basic unit of storage and measurement used by computers and other digital devices. Bytes are used to represent a wide range of data types, from characters and numbers to images and videos. Understanding the size and representation of bytes is essential for anyone working in the field of computing. As technology continues to advance, the importance of bytes will only continue to grow. By staying up-to-date with the latest developments and trends in byte usage, we can ensure that we are able to make the most of this fundamental unit of digital information.