Epoch time, also known as Unix time or POSIX time, is a fundamental concept in computer programming and data processing. It is a system for measuring time that is based on the number of seconds that have elapsed since January 1, 1970, at 00:00:00 UTC (Coordinated Universal Time). This date is commonly referred to as the Unix epoch, and it serves as a universal reference point for all computers and devices that use epoch time.

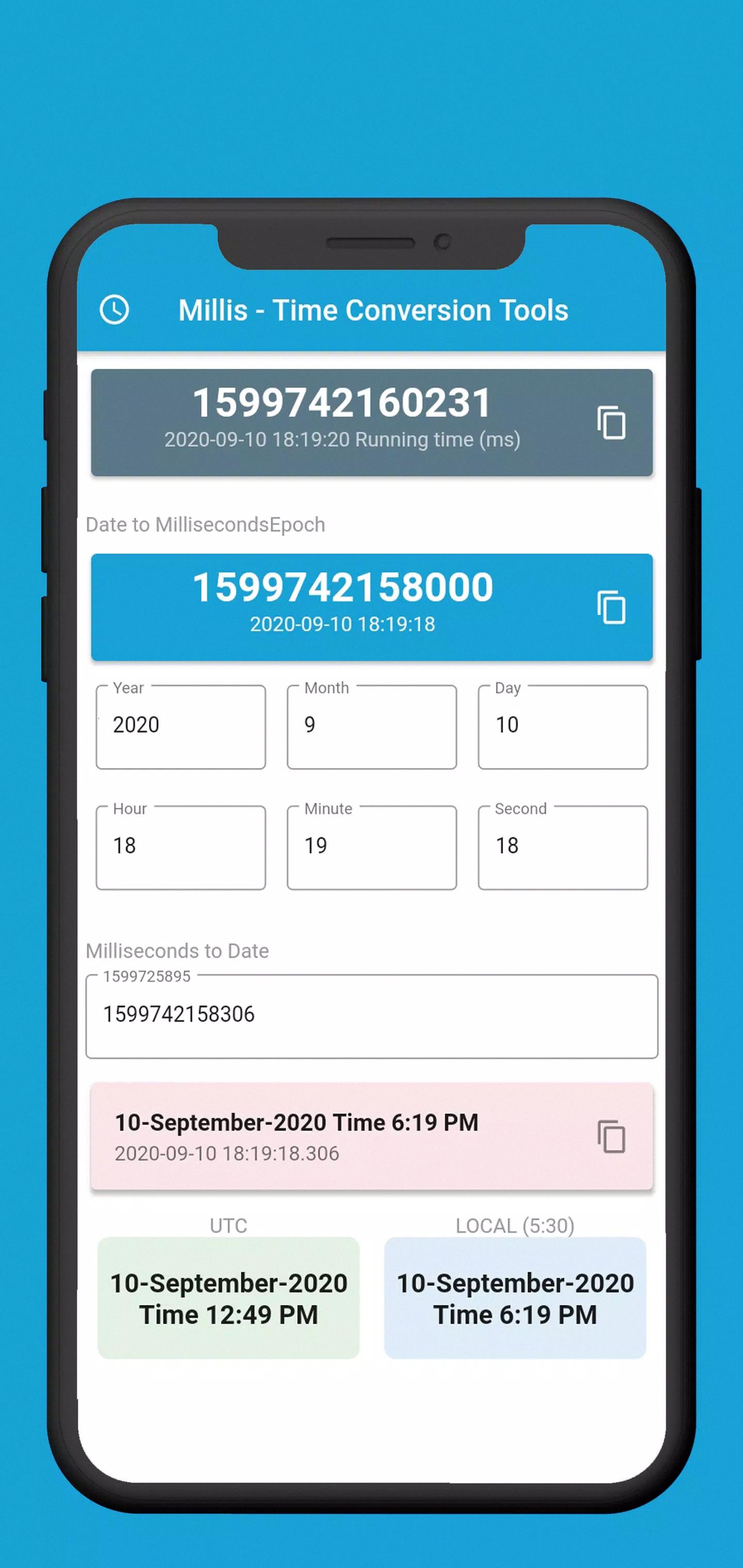

While epoch time is usually measured in seconds, it can also be expressed in milliseconds. This is where epoch millis come in: they are simply the number of milliseconds that have elapsed since the Unix epoch. In other words, epoch millis measure the same timeline as epoch seconds, at a finer resolution.

Why would you need to use epoch millis instead of epoch seconds? The main reason is precision: some applications, such as real-time data processing or high-frequency trading, need to distinguish events that occur within the same second. Epoch millis also keep the property that makes epoch seconds useful: they count from a fixed UTC instant, so a value identifies the same moment regardless of the local time zone in which it is read.
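The time zone point can be illustrated with a minimal Python sketch. The helper name `to_epoch_millis` and the fixed UTC offsets standing in for Tokyo and New York are assumptions chosen for illustration; the sketch only shows that one instant, viewed from different zones, maps to a single epoch millis value.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch as a timezone-aware datetime.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_millis(moment: datetime) -> int:
    """Return whole milliseconds since the Unix epoch for an aware datetime."""
    if moment.tzinfo is None:
        raise ValueError("expected a timezone-aware datetime")
    # Dividing one timedelta by another with // yields an int (floored).
    return (moment - EPOCH) // timedelta(milliseconds=1)


# One instant, expressed in three zones (fixed offsets, for illustration only).
utc_noon = datetime(2022, 1, 1, 12, 0, tzinfo=timezone.utc)
tokyo_view = utc_noon.astimezone(timezone(timedelta(hours=9)))
new_york_view = utc_noon.astimezone(timezone(timedelta(hours=-5)))

print(to_epoch_millis(utc_noon))       # 1641038400000
print(to_epoch_millis(tokyo_view))     # 1641038400000
print(to_epoch_millis(new_york_view))  # 1641038400000
```

The wall-clock reading differs in each zone, but the stored number does not, which is why epoch values are convenient for storage and comparison.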

To convert a standard Unix epoch time (measured in seconds) to epoch millis, simply multiply the number of seconds by 1000. For example, the Unix epoch time for January 1, 2022, at 00:00:00 UTC is 1640995200. To convert this to epoch millis, you would multiply it by 1000, resulting in 1640995200000.
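As a minimal sketch of this conversion in Python (the function names `seconds_to_millis` and `millis_to_seconds` are illustrative, not part of any standard library):

```python
from datetime import datetime, timezone


def seconds_to_millis(epoch_seconds: int) -> int:
    """Convert epoch seconds to epoch millis."""
    return epoch_seconds * 1000


def millis_to_seconds(epoch_millis: int) -> int:
    """Convert epoch millis to whole epoch seconds, dropping the fraction.

    Floor division rounds toward negative infinity, so timestamps before
    the epoch stay on the correct second.
    """
    return epoch_millis // 1000


# Derive the example value from the calendar date rather than hard-coding it.
jan_1_2022 = datetime(2022, 1, 1, tzinfo=timezone.utc)
epoch_seconds = int(jan_1_2022.timestamp())

print(epoch_seconds)                     # 1640995200
print(seconds_to_millis(epoch_seconds))  # 1640995200000
print(millis_to_seconds(1640995200999))  # 1640995200
```

Converting back from millis to seconds discards the sub-second part, so a round trip is lossless only for values that fall on a whole second.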

Epoch millis can be used in a variety of applications, including database management, event logging, and scientific research. They are well suited to recording and analyzing time-based data wherever one-second resolution is too coarse, although applications that need even finer detail may use microseconds or nanoseconds instead.

Epoch millis are a valuable concept in computer programming and data processing. They provide finer resolution than epoch seconds and, like epoch seconds, they are a standardized measure of time that is not affected by local time zones. Whether you are working with real-time data or scientific research, epoch millis can help you to better understand and analyze time-based information.

What Unit of Time Does Epoch Represent?

By default, epoch time is measured in seconds, not milliseconds. The Unix epoch, which is the starting point for calculating epoch time, is January 1, 1970, at midnight UTC. This means that when you see an epoch time value, it represents the number of seconds that have passed since that moment. It’s important to note that epoch time does not take leap seconds into account. Leap seconds are added to UTC periodically to keep clocks in sync with the Earth’s rotation, whereas epoch time treats every day as exactly 86,400 seconds long. So while epoch time is not measured in milliseconds by default, you can convert an epoch time value to milliseconds by multiplying it by 1000.
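The leap second behavior can be seen with a short Python sketch. It compares the epoch values at the start of December 31, 2016, and January 1, 2017, a day that ended with an inserted leap second. The helper name `epoch_seconds` is an assumption for illustration.

```python
from datetime import datetime, timezone


def epoch_seconds(moment: datetime) -> int:
    """Return whole seconds since the Unix epoch for an aware datetime."""
    return int(moment.timestamp())


# A leap second was inserted at the end of December 31, 2016 (UTC).
day_start = datetime(2016, 12, 31, tzinfo=timezone.utc)
next_day_start = datetime(2017, 1, 1, tzinfo=timezone.utc)

elapsed = epoch_seconds(next_day_start) - epoch_seconds(day_start)
print(elapsed)  # 86400, although 86401 real seconds passed that day
```

The extra second does not appear in the count, which is what it means for epoch time to ignore leap seconds.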


Understanding Epoch Seconds

Epoch seconds are the number of seconds that have elapsed since the epoch, which is typically defined as January 1st, 1970 at 00:00:00 UTC. This value is often used in computing systems to represent and compare time, since it provides a standardized reference point that can be easily converted to and from other time formats. Epoch seconds are commonly used in programming languages, databases, and operating systems for tasks such as file modification times, event scheduling, and network communication. The current epoch time can be obtained by querying the system clock or using specialized functions available in programming languages and libraries.
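In Python, for instance, the standard `time` module exposes the system clock. The sketch below wraps it in two helpers whose names (`current_epoch_seconds`, `current_epoch_millis`) are illustrative assumptions:

```python
import time


def current_epoch_seconds() -> int:
    """Return the current time as whole seconds since the Unix epoch."""
    return int(time.time())


def current_epoch_millis() -> int:
    """Return the current time as whole milliseconds since the Unix epoch.

    time.time_ns() (Python 3.7+) returns integer nanoseconds, which avoids
    the rounding that can occur when multiplying a float by 1000.
    """
    return time.time_ns() // 1_000_000


print(current_epoch_seconds())
print(current_epoch_millis())
```

The printed values depend on when the code runs, so no expected output is shown here.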

The Significance of January 1, 1970 as the Epoch

January 1, 1970, is referred to as the epoch in computing because it represents the starting point for measuring time in Unix-based systems. The choice of this date was not based on any significant historical or cultural event, but rather on the need for a uniform reference point for measuring time across different computers and systems. The Unix operating system was first developed in the late 1960s, and its engineers needed to establish a standard starting point for timekeeping that could be used across different hardware and software platforms. January 1, 1970, was a convenient, round date close to when the system was developed: the first day of a new year and of a new decade. From this starting point, time in Unix is measured in seconds that have elapsed since the epoch, with positive values representing time after the epoch and negative values representing time before the epoch. This system of time measurement has become an essential part of computer programming and data processing, and the epoch date of January 1, 1970, remains a significant reference point in modern computing.
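The sign convention for times before and after the epoch can be sketched in Python as follows. The helpers `to_epoch_seconds` and `from_epoch_seconds` are hypothetical names; they use plain date arithmetic so that negative values behave the same way on every platform.

```python
from datetime import datetime, timedelta, timezone

# The Unix epoch as a timezone-aware datetime.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_epoch_seconds(moment: datetime) -> int:
    """Return whole seconds since the epoch; negative for earlier moments."""
    return (moment - EPOCH) // timedelta(seconds=1)


def from_epoch_seconds(epoch_seconds: int) -> datetime:
    """Return the UTC datetime that an epoch seconds value refers to."""
    return EPOCH + timedelta(seconds=epoch_seconds)


one_second_before = datetime(1969, 12, 31, 23, 59, 59, tzinfo=timezone.utc)

print(to_epoch_seconds(EPOCH))              # 0
print(to_epoch_seconds(one_second_before))  # -1
print(from_epoch_seconds(-86400))           # 1969-12-31 00:00:00+00:00
```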

Conclusion

Epoch millis are the number of milliseconds that have elapsed since the Unix epoch, which is January 1st, 1970 at 00:00:00 UTC. The epoch serves as the reference point for computer clocks and timestamp values in many computing systems, and counting from it is a widely accepted standard for measuring time in the digital world. Epoch millis values are often used in programming languages and databases to store and manipulate time-related data. They are a valuable tool for developers and engineers, as they make it possible to accurately track and synchronize time-sensitive events and operations. In short, epoch millis are a small but important building block of modern computing systems.